Cogito Blog

Interspeech 2017 – Robots, Deep Neural Networks and the Future of Speech

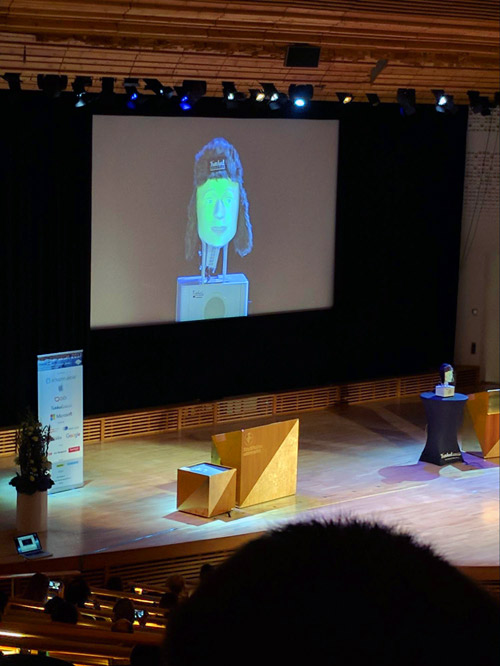

There was something special about the prospect of having Interspeech 2017, the most prominent speech science and technology conference of the year, in Stockholm. Perhaps it was the city's rich history of speech science, including being home to great researchers like the late Gunnar Fant, who was responsible for pioneering work on the acoustics of speech production and speech synthesis. On arrival at the conference at Stockholm University, that specialness was evident: the opening ceremony was held in the architecturally impressive Aula Magna building, where the FurHat robot delivered conference logistics to the audience. Four compelling days of speech and language processing paper presentations followed, providing a window into the newest innovations in voice, emotion and speech technology. Interested to learn more? Check out the top themes from this year’s Interspeech conference below.

Fig 1. Giant tongue welcomes attendees to the University

Fig 2. FurHat robot hilariously delivering logistics information to the audience

Big players are taking notice

It has been three years since I was last at Interspeech, and the first thing I noticed was the massively increased presence of large commercial institutions. Google, Amazon, Apple and many others were highly visible as soon as you entered the venue. Speech technology is a hot topic: recent accuracy surges in core speech technologies have led to a wide variety of product offerings, and top speech researchers are highly sought after by many companies. The larger companies present each held their own separate evening events to help attract researchers from the conference.

Fig 3. Google’s evening event

Maturity of ASR, TTS over other areas

One impact of the large commercial interest in speech technology has been to drive core areas to an advanced level of scientific maturity. Presentations on automatic speech recognition (ASR) and text-to-speech synthesis (TTS), besides showing massively improved results compared to three years ago, featured a good deal more experimentation and evaluation standardization, as well as larger and more suitable datasets, than emerging areas such as emotion recognition.

WaveNet as a new paradigm for speech synthesis

The WaveNet approach to speech and audio synthesis was introduced by Google last year. Rather than generating parametric descriptions of speech or gluing together units of speech, the deep neural network model generates the actual audio waveform directly. WaveNet’s status as a new paradigm for speech synthesis was further solidified during its session of talks in the large Aula Magna auditorium. Despite their impressive prototype, the naturalness of Google’s synthesis demonstrated last year is still proving hard to beat.

Using context to improve emotional valence recognition

There were quite a few presentations on the topic of emotion recognition during the event, demonstrating increased interest in the area. One point discussed was the challenge of effectively discriminating positive from negative emotions using voice alone. At the same time, research using larger contexts, combined with word embeddings of lexical data, is a promising path that is resulting in real improvements.

The data problem

The problem of data availability and access was a consistent theme discussed during sessions and coffee breaks. Academic research groups in particular are finding it hard to compete with the large commercial teams due to data availability and the fact that state-of-the-art approaches require large, suitable datasets to work effectively.

Neural networks for everything

In case there was any doubt, deep neural networks (DNNs) are proving to be the state-of-the-art modeling approach in almost every area of speech technology. However, there are considerable differences in the way people are using them. Some researchers simply applied standard DNN recipes to their problem, whereas others presented experiments with sophisticated modeling architectures suited to the task at hand. For this latter group, it seems that the discipline itself is now “deep neural networks” rather than just the approach.

Robots at various points on the uncanny valley curve

Finally, it was hard not to notice the number of robots around the venue. Some held your gaze as you passed, others tried to strike up a conversation, and one, MiRo, the world’s first commercial biomimetic robot (see Fig. 4 below), begged you to pet it to improve its mood. These robots are certainly fascinating from a scientific perspective, but their commercial applications (for me at least) are still somewhat undefined.

Fig 4. MiRo, the world’s first commercial biomimetic robot

What next?

Clearly, core areas of speech technology like automatic speech recognition and text-to-speech synthesis have reached an impressive level of maturity, but there remain serious open questions around how to use the “voice interface” to create user experiences which actually solve important problems for people. Cognitive and behavioral science still have an important role to play in designing and developing truly effective human-computer interaction scenarios. It is also likely that affective and emotion processing through voice will continue to gain momentum and that, with the increased attention, challenging obstacles like robustly recognizing valence (i.e. positive vs negative emotions) will eventually be overcome. Such advances will, however, depend on the collection of large, well-annotated audio datasets, something which is distinctly lacking from this sub-field right now. The challenge then will be combining these increasingly accurate sensing capabilities to improve and elevate the human experience in both work and personal scenarios.